
Hi there! I am an AI Researcher broadly interested in Deep Learning and Neuromorphic Engineering. I am currently an AI Systems Engineer at Qualcomm.

I graduated from the University of California, Santa Cruz.


My research interests include, but are not restricted to:


ONNX Model Comparator

A Visual Studio Code extension for comparing ONNX graphs, providing insight into the differences between models to aid their analysis.


Qtron

A lightweight VS Code extension for viewing and analyzing ONNX models, with seamless onnx_tool integration.


sconce: E2E AutoML Model Compression/Inference Package

A one-stop solution for model compression, covering neural architecture search (NAS), pruning, quantization, layer fusion, and a sparse engine, through to CUDA optimizations for inference.


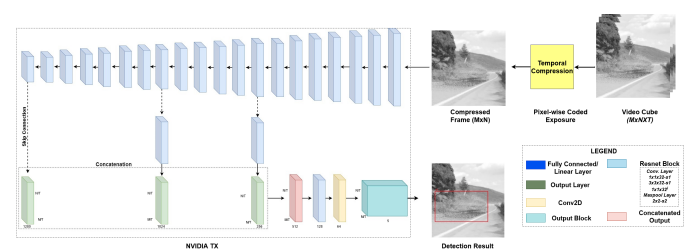

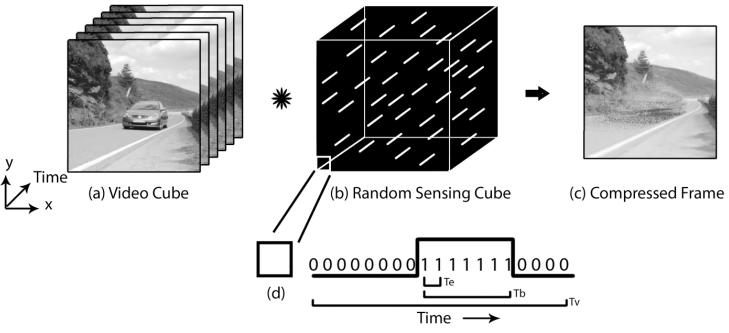

Real-Time Object Detection and Localization in Compressive Sensed Video

Sathyaprakash Narayanan*, Yeshwanth Ravi Theja*, Venkat Rangan, Anirban Chakraborty, Chetan Singh Thakur

We propose a novel task: detecting and localizing objects directly on the compressed frames. This removes the need to reconstruct the frames and reduces the search rate by up to 20x (the compression rate). The proposed model achieves 46.27% mAP, and we also demonstrate real-time inference on an NVIDIA TX2 embedded board at 45.11% mAP.


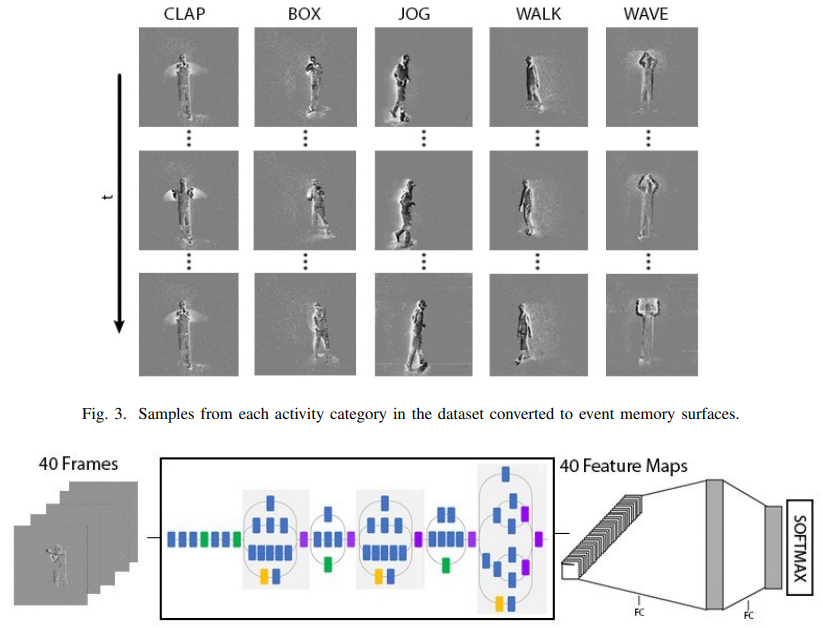

n-HAR: A Neuromorphic Event-Based Human Activity Recognition System using Memory Surfaces

A system for human activity recognition from event-based camera data. We show that such tasks, which generally need high-frame-rate sensors for accurate predictions, can be achieved by adapting existing computer vision techniques to the spiking domain. We use event memory surfaces to make the sparse event data compatible with deep convolutional neural networks (CNNs). We also provide the community with a new dataset of five categories of human activities, captured in the real world without any simulation. Using event memory surfaces, we achieve an accuracy of 94.3% on our activity recognition dataset.
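The idea of turning sparse events into a dense, CNN-ready image can be illustrated with a small sketch. The exact memory-surface definition used in n-HAR is not given here, so this assumes one common formulation, an exponentially decaying time surface: each pixel stores exp(-(t_ref - t_last) / tau), where t_last is the timestamp of the most recent event at that pixel, with separate channels for ON and OFF polarity. The names `Event`, `memory_surface`, and the decay constant `tau` are hypothetical, chosen only for illustration.

```python
import math
from typing import List, NamedTuple, Optional


class Event(NamedTuple):
    x: int         # column index
    y: int         # row index
    t: float       # timestamp in seconds
    polarity: int  # +1 for ON events, -1 for OFF events


def memory_surface(events: List[Event], height: int, width: int,
                   t_ref: float, tau: float = 0.05) -> List[List[List[float]]]:
    """Hypothetical exponential time surface, shape 2 x height x width.

    Channel 0 holds ON events, channel 1 holds OFF events. Each pixel holds
    exp(-(t_ref - t_last) / tau) for its most recent event at or before t_ref;
    pixels that never fired stay 0.
    """
    # Most recent timestamp seen per (polarity channel, row, column).
    last: List[List[List[Optional[float]]]] = [
        [[None] * width for _ in range(height)] for _ in range(2)
    ]
    for ev in events:
        if ev.t > t_ref:
            continue  # ignore events that occur after the reference time
        c = 0 if ev.polarity > 0 else 1
        prev = last[c][ev.y][ev.x]
        if prev is None or ev.t > prev:
            last[c][ev.y][ev.x] = ev.t

    # Convert timestamps into exponentially decaying "memory" values.
    surface = [[[0.0] * width for _ in range(height)] for _ in range(2)]
    for c in range(2):
        for i in range(height):
            for j in range(width):
                t_last = last[c][i][j]
                if t_last is not None:
                    surface[c][i][j] = math.exp(-(t_ref - t_last) / tau)
    return surface


# Example: three events on a 2 x 3 sensor, sampled at t_ref = 0.1 s.
events = [Event(0, 0, 0.0, 1), Event(1, 0, 0.1, -1), Event(2, 1, 0.2, 1)]
s = memory_surface(events, height=2, width=3, t_ref=0.1)
```

The resulting 2 x H x W array is dense, so it can be fed to a standard CNN like an ordinary two-channel image; a smaller `tau` keeps only the most recent motion visible, while a larger one retains a longer history.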


Real-Time Implementation of Proto-Object Based Visual Saliency Model

Sathyaprakash Narayanan, Yeshwanth Ravi Theja, Jamal Lottier, Ernst Niebur, Ralph Etienne-Cummings, Chetan Singh Thakur

IEEE International Symposium on Circuits and Systems (ISCAS), 2019

We demonstrate a real-time implementation of a proto-object based neuromorphic visual saliency model on an embedded processing board. Visual saliency models are difficult to implement in hardware for real-time applications because of their computational complexity: the conventional implementation requires a large number of convolutions to filter several feature channels across multiple image pyramids. Our implementation accounts for dynamic temporal motion changes by convolving efficiently along the time axis and processing these convolutions in parallel.


A Compressive Sensing Video dataset using Pixel-wise coded exposure

A vast amount of video data, from surveillance to broadcasting footage, is generated every minute. Two roadblocks keep us from using this data as is. The first is storage: hardware constraints limit how much of the information we can keep. The second is computation: processing this data is so expensive that working with it directly is often infeasible. Compressive sensing (CS) is a signal processing technique that, through optimization, exploits the sparsity of a signal to recover it from far fewer samples than the Shannon-Nyquist sampling theorem requires. Recovery is possible under two conditions: the signal must be sparse in some basis, and the measurements must be incoherent with that basis. In this work, we propose a new comprehensive video dataset in which the data is compressed using pixel-wise coded exposure, which also resolves various other impediments.
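Pixel-wise coded exposure can be sketched as a simple forward model: each pixel integrates light only during the sub-frames in which its binary code is on, so T frames collapse into one coded frame, y[i][j] = sum over t of M[t][i][j] * X[t][i][j], a T-fold reduction in the number of frames stored. This is a minimal sketch that assumes i.i.d. random binary codes; real sensors often use structured codes (for example, a single exposure window per pixel starting at a random time), and the helper names `random_masks` and `coded_exposure` are hypothetical.

```python
import random
from typing import List

Frame = List[List[float]]  # height x width grid of intensities
Mask = List[List[int]]     # height x width grid of 0/1 exposure bits


def random_masks(num_frames: int, height: int, width: int,
                 p: float = 0.5, seed: int = 0) -> List[Mask]:
    """Hypothetical exposure code: each pixel is on in each sub-frame with probability p."""
    rng = random.Random(seed)
    return [[[1 if rng.random() < p else 0 for _ in range(width)]
             for _ in range(height)]
            for _ in range(num_frames)]


def coded_exposure(frames: List[Frame], masks: List[Mask]) -> Frame:
    """Collapse T frames into one: y[i][j] = sum_t masks[t][i][j] * frames[t][i][j]."""
    if len(frames) != len(masks):
        raise ValueError("need exactly one mask per frame")
    height, width = len(frames[0]), len(frames[0][0])
    coded = [[0.0] * width for _ in range(height)]
    for frame, mask in zip(frames, masks):
        for i in range(height):
            for j in range(width):
                # The pixel accumulates light only while its code bit is 1.
                coded[i][j] += mask[i][j] * frame[i][j]
    return coded


# Example: 20 sub-frames of a 4 x 4 scene collapse into a single coded frame.
video = [[[float(t)] * 4 for _ in range(4)] for t in range(20)]
y = coded_exposure(video, random_masks(20, 4, 4))
```

Recovering the original frames from such coded frames is the compressive-sensing reconstruction step, which relies on the sparsity of natural video; the random code helps keep the measurements incoherent.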


Reviewer, ISCAS 2024


Reviewer, WACV 2019, 2020, 2022, 2023, 2024

Reviewer, BMVC 2018


Reviewer, IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)


SIP Mentor, 2023


Forked from Leonid Keselman's Jekyll fork of this page.